research

my main interest is in visualizing, summarizing, and studying workflows.

below is a short list of some of the excellent people i have collaborated with over the past few years. none of the following work would have been possible without their help.

note: unless otherwise marked, all source and data available directly from this site are released under the MIT license.

please send me an email if you find this work interesting or helpful.

journal and conference articles

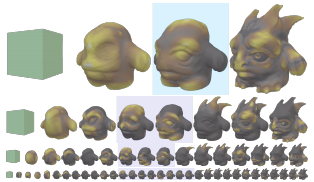

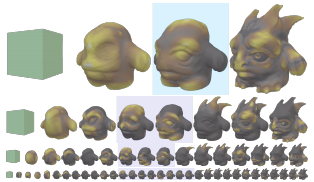

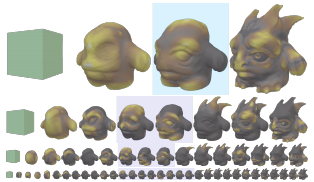

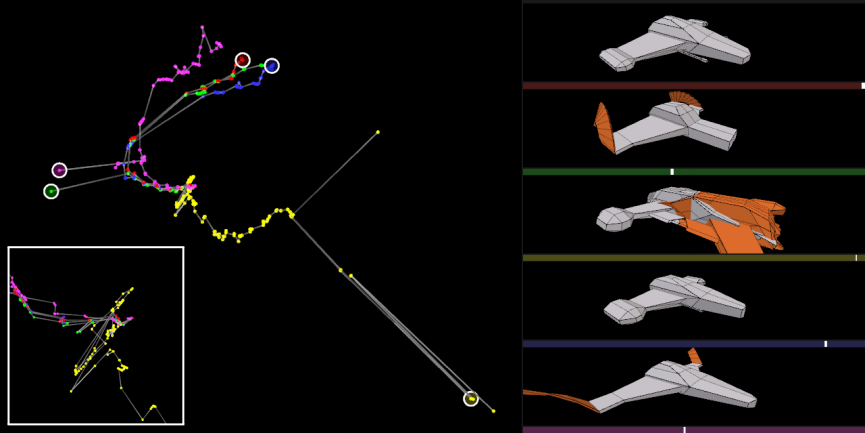

3dflow: continuous summarization of mesh editing workflows

j.d.denning, v.tibaldo, f.pellacini.

acm transactions on graphics (siggraph),

vol. 34, no. 4, article 140, jul 2015.

to be presented at siggraph 2015.

submission: paper video

more: fast-forward details

abstract:

Mesh editing software is improving, allowing skilled artists to create detailed meshes efficiently. For a variety of reasons, artists are interested in sharing not just their final mesh but also their whole workflow, though the most common sharing medium, time-lapse or sped-up video, has limitations. In this paper, we present 3DFlow, an algorithm that computes continuous summarizations of mesh editing workflows. 3DFlow takes as input a sequence of meshes and outputs a visualization of the workflow summarized at any level of detail. The output is enhanced by highlighting edited regions and, if provided, overlaying visual annotations to indicate the artist's work, e.g., summarizing brush strokes in sculpting. We tested 3DFlow with a large set of inputs using a variety of mesh editing techniques, from digital sculpting to low-poly modeling, and found 3DFlow performed well for all. Furthermore, 3DFlow is independent of the modeling software used because it requires only mesh snapshots, and it uses additional information, when available, only for optional overlays. We release 3DFlow as open source for artists to showcase their work and release all our datasets so other researchers can improve upon our work.

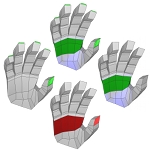

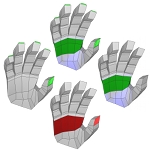

meshgit: diffing and merging meshes for polygonal modeling

j.d.denning, f.pellacini.

acm transactions on graphics (siggraph),

vol. 32, no. 4, article 35, jul 2013.

presented at siggraph 2013.

acm dl

submission: paper updated-source

presentation: pdf interactive-bin encore

source: bin-src-data

abstract:

This paper presents MeshGit, a practical algorithm for diffing and merging polygonal meshes typically used in subdivision modeling workflows. Inspired by version control for text editing, we introduce the mesh edit distance as a measure of the dissimilarity between meshes. This distance is defined as the minimum cost of matching the vertices and faces of one mesh to those of another. We propose an iterative greedy algorithm to approximate the mesh edit distance, which scales well with model complexity, providing a practical solution to our problem. We translate the mesh correspondence into a set of mesh editing operations that transforms the first mesh into the second. The editing operations can be displayed directly to provide a meaningful visual difference between meshes. For merging, we compute the difference between two versions and their common ancestor, as sets of editing operations. We robustly detect conflicting operations, automatically apply non-conflicting edits, and allow the user to choose how to merge the conflicting edits. We evaluate MeshGit by diffing and merging a variety of meshes and find it to work well for all.
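a minimal sketch of the greedy idea, assuming a much simpler cost than meshgit's: only vertex positions are matched (faces and adjacency are ignored), matching two vertices costs their euclidean distance, and each unmatched vertex costs a fixed `unmatched_cost` as an addition or deletion. the function name `greedy_vertex_matching` and this cost model are hypothetical, chosen for illustration only.

```python
import math


def greedy_vertex_matching(verts_a, verts_b, unmatched_cost=1.0):
    """Greedily match vertices of mesh A to mesh B by position.

    Simplified stand-in for a mesh edit distance: matching costs the
    Euclidean distance between two positions, and every vertex left
    unmatched costs `unmatched_cost` (counted as a deletion or addition).
    Faces and adjacency terms are ignored in this sketch.
    """
    # All candidate pairs, cheapest first.
    candidates = sorted(
        (math.dist(pa, pb), i, j)
        for i, pa in enumerate(verts_a)
        for j, pb in enumerate(verts_b)
    )
    matches, used_a, used_b = {}, set(), set()
    for dist, i, j in candidates:
        # A match only pays off if it is cheaper than leaving both vertices unmatched.
        if dist >= 2.0 * unmatched_cost:
            break
        if i in used_a or j in used_b:
            continue
        matches[i] = j
        used_a.add(i)
        used_b.add(j)
    unmatched = (len(verts_a) - len(matches)) + (len(verts_b) - len(matches))
    matched_cost = sum(math.dist(verts_a[i], verts_b[j]) for i, j in matches.items())
    return matches, matched_cost + unmatched_cost * unmatched
```

for example, matching `[(0, 0, 0), (1, 0, 0)]` against `[(0, 0, 0.1), (5, 5, 5)]` pairs the first two vertices and leaves one vertex unmatched on each side, for a total cost of about 2.1. taking the cheapest pairs first keeps the sketch simple; it approximates, rather than guarantees, a minimum-cost matching.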

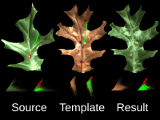

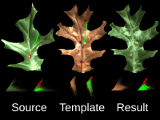

appwarp: retargeting measured materials by appearance-space warping

x.an, x.tong, j.d.denning, f.pellacini.

acm transactions on graphics (siggraph asia),

vol. 30, no. 6, article 147, dec 2011.

presented at siggraph asia 2011.

acm dl

submission: paper supplemental-images video

abstract:

We propose a method for retargeting measured materials, where a source measured material is edited by applying the reflectance functions of a template measured dataset. The resulting dataset is a material that maintains the spatial patterns of the source dataset while exhibiting the reflectance behaviors of the template. Compared to editing materials by successive selections and modifications, retargeting shortens the time required to achieve a desired look by directly using template data, just as color transfer does for editing images. With our method, users only have to mark corresponding regions of source and template with rough strokes, with no need for further input.

This paper introduces AppWarp, an algorithm that achieves retargeting as a user-constrained, appearance-space warping operation that executes in tens of seconds. Our algorithm is independent of the measured material representation and supports retargeting of analytic and tabulated BRDFs as well as BSSRDFs. In addition, our method makes no assumptions about the data distribution in appearance space or about the underlying correspondence between source and target. These characteristics make AppWarp the first general formulation for appearance retargeting. We validate our method on several types of materials, including leaves, metals, waxes, woods, and greeting cards. Furthermore, we demonstrate how retargeting can be used to enhance diffuse textures with high-quality reflectance.

meshflow: interactive visualization of mesh construction sequences

j.d.denning, w.b.kerr, f.pellacini.

acm transactions on graphics (siggraph),

vol. 30, no. 4, article 66, jul 2011.

presented at siggraph 2011.

acm dl

submission: paper bin-code-data study data

videos: main biped helmet hydrant robot shark

presentation: pdf encore preview

abstract:

The construction of polygonal meshes remains a complex task in Computer Graphics, taking tens of thousands of individual operations over several hours of modeling time. The complexity of modeling in terms of number of operations and time makes it difficult for artists to understand all details of how meshes are constructed. We present MeshFlow, an interactive system for visualizing mesh construction sequences. MeshFlow hierarchically clusters mesh editing operations to provide viewers with an overview of the model construction while still allowing them to view more details on demand. We base our clustering on an analysis of the frequency of repeated operations and implement it using substituting regular expressions. By filtering operations based on either their type or which vertices they affect, MeshFlow also ensures that viewers can interactively focus on the relevant parts of the modeling process. Automatically generated graphical annotations visualize the clustered operations. We have tested MeshFlow by visualizing five mesh sequences, each taking a few hours to model, and found it to work well for all. We have also evaluated MeshFlow with a case study using modeling students. We conclude that our system provides useful visualizations that were found to be more helpful than video or document-form instructions for understanding mesh construction.
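a minimal sketch of clustering repeated operations with a substituting regular expression, assuming operations arrive as a flat list of names: each distinct name is encoded as one character, and `re.sub` with a callback replaces every run of identical characters by a single symbol while recording the cluster. only runs of the same operation are handled here; the function name is hypothetical, and this is not meshflow's actual set of patterns.

```python
import re


def cluster_repeated_operations(ops):
    """Collapse runs of the same operation into (operation, count) clusters.

    Each distinct operation name is encoded as one character so that a
    substituting regular expression can find and replace repeated runs.
    Returns the clusters plus the compressed string (one character per
    cluster), which a further pass could cluster at a coarser level.
    """
    code_of = {}
    for op in ops:
        code_of.setdefault(op, chr(ord("a") + len(code_of)))
    op_of = {code: op for op, code in code_of.items()}
    encoded = "".join(code_of[op] for op in ops)

    clusters = []

    def collapse(match):
        run = match.group(0)
        clusters.append((op_of[run[0]], len(run)))
        return run[0]  # replace the whole run with a single symbol

    # "(.)\1*" matches one character followed by any repeats of it.
    compressed = re.sub(r"(.)\1*", collapse, encoded)
    return clusters, compressed
```

for instance, `["extrude", "translate", "translate", "translate", "scale", "extrude", "extrude"]` yields the clusters `("extrude", 1)`, `("translate", 3)`, `("scale", 1)`, `("extrude", 2)`. the compressed string could be passed through further patterns to build coarser levels of the hierarchy.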

written in mono; uses opengl (via opentk) and alglib.

coming soon: src

readme scripts bin v39615 patch keys change log

thanks to peter k.h. gragert for helping update this!

bendylights: artistic control of direct illumination by curving light rays

w.b.kerr, f.pellacini, j.d.denning.

computer graphics forum (eurographics symposium on rendering), 2010.

acm dl

submission: paper

abstract:

In computer cinematography, artists routinely use non-physical lighting models to achieve desired appearances. This paper presents BendyLights, a non-physical lighting model where light travels nonlinearly along splines, allowing artists to control light direction and shadow position at different points in the scene independently. Since the light deformation is smoothly defined at all world-space positions, the resulting non-physical lighting effects remain spatially consistent, avoiding the frequent incongruities of many non-physical models. BendyLights are controlled simply by reshaping splines using familiar interfaces, and they require very few parameters. BendyLight control points can be keyframed to support animated lighting effects. We demonstrate BendyLights both in a real-time rendering system for editing and in a production renderer for final rendering, where we show that BendyLights can also be used with global illumination.
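a hypothetical sketch of the core idea, light following a curve instead of a straight line, assuming a single quadratic bezier spline and a deliberately crude rule: the light direction at a shading point is the normalized spline tangent at the closest sampled curve point. `bezier_point`, `bezier_tangent`, and `bent_light_direction` are illustrative names; this is not how bendylights defines its smooth world-space deformation.

```python
import math


def bezier_point(p0, p1, p2, t):
    """Point on a quadratic Bezier curve at parameter t in [0, 1]."""
    s = 1.0 - t
    return tuple(s * s * a + 2.0 * s * t * b + t * t * c for a, b, c in zip(p0, p1, p2))


def bezier_tangent(p0, p1, p2, t):
    """Derivative of the quadratic Bezier curve with respect to t."""
    s = 1.0 - t
    return tuple(2.0 * s * (b - a) + 2.0 * t * (c - b) for a, b, c in zip(p0, p1, p2))


def bent_light_direction(point, p0, p1, p2, samples=256):
    """Direction of travel of the bent light near `point`.

    Hypothetical simplification: find the curve sample closest to the
    shading point and return the normalized tangent there.
    """
    ts = [i / (samples - 1) for i in range(samples)]
    t = min(ts, key=lambda u: math.dist(point, bezier_point(p0, p1, p2, u)))
    d = bezier_tangent(p0, p1, p2, t)
    length = math.sqrt(sum(x * x for x in d))
    return tuple(x / length for x in d)
```

with control points `(0, 0, 0)`, `(0, 0, 1)`, `(1, 0, 1)`, points near the start of the curve receive light traveling along +z, while points near the end receive light traveling along +x, so moving one control point redirects the light locally.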

talks

the maths and algorithms behind photo-realistic graphics

frank s. brenneman lecture series, tabor college, ks

2015 april 24, invited talk

presentation: pdf interactive

abstract:

Rendering systems today are able to produce images that are indistinguishable from actual photographs. In this talk, I will present the good, the bad, and the weird parts of the math and algorithms behind photo-realistic graphics.

using monte carlo integration to solve the rendering equation

frank s. brenneman lecture series, tabor college, ks

2015 april 24, invited talk

presentation: pdf interactive

abstract:

Continuing from the previous talk (the maths and algorithms behind photo-realistic graphics), I will present Monte Carlo integration, the numerical method behind solving the rendering equation.
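a small worked example of the monte carlo estimator: an integral is approximated by averaging f(x)/p(x) over random samples x drawn with density p. here the integrand is the cosine term cos θ over the hemisphere around the normal (0, 0, 1), whose exact integral is π, and directions are sampled uniformly, so p = 1/(2π). the function name and sample counts are assumptions for illustration.

```python
import math
import random


def estimate_cosine_integral(num_samples, seed=0):
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere.

    Directions are sampled uniformly over the hemisphere around the normal
    (0, 0, 1), so the pdf is 1 / (2 * pi) everywhere; the exact answer is pi.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(num_samples):
        # For uniform hemisphere sampling, z = cos(theta) is uniform in [0, 1).
        z = rng.random()
        phi = 2.0 * math.pi * rng.random()
        r = math.sqrt(1.0 - z * z)
        direction = (r * math.cos(phi), r * math.sin(phi), z)
        cos_theta = max(0.0, direction[2])  # dot(direction, normal)
        total += cos_theta / pdf            # f(x) / p(x)
    return total / num_samples
```

with 200,000 samples the estimate typically lands within about 0.01 of π; the error shrinks roughly as 1/√n in the number of samples n.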

mesh(flow|git): understanding and managing mesh editing workflows

blender conference 2014, amsterdam link

2014 october 26

presentation: pdf video part 1 video part 2

abstract:

Mesh editing videos and tutorials are essential for learning, but they are either too short to show details or too long to stay interesting. Further, comparing final meshes requires a manual search for differences. I present my Ph.D. dissertation work on understanding mesh editing workflows, along with some preliminary, ongoing work.

developing and designing powerful modeling tools

blender conference 2014, amsterdam link

co-presented with jonathan williamson

2014 october 24

video

abstract:

This talk will explore aspects of designing, developing, and implementing modeling tools such as the Contours and Polystrips retopology add-ons. Alongside sharing our experiences, this talk will raise many points for discussion on how we can further improve Blender’s core modeling tools and the Blender Python API to allow more powerful add-ons and easier development.

open-source mindset and science

science seminar, taylor university

2014 october 20, invited talk

presentation: pdf

abstract:

Large amounts of data are involved in studying how 3D artists create their work, and gathering this data can be challenging. In this talk, I present my observations of the differences between "closed-source" and "open-source" 3D artist communities.

kernel nyström method for light transport

jiaping wang, yue dong, xin tong, zhouchen lin, baining guo

a technical papers presentation by jon denning

slides slides+media

ph.d. thesis

modflows: methods for studying and managing mesh editing workflows

submission: paper

presentation: pdf

abstract:

At the heart of computer games and computer generated films lies 3D content creation. A student wanting to learn how to create and edit 3D meshes can quickly find thousands of videos explaining the workflow process. These videos are a popular medium due to a simple setup that minimally interrupts the artist's workflow, but video recordings can be quite challenging to watch. Typical mesh editing sessions involve several hours of work and thousands of operations, which means the video recording can be too long to stay interesting if played back at real-time speed or lose too much information when sped up. Moreover, regardless of the playback speed, a high-level overview is quite difficult to construct from long editing sessions.

In this thesis, we present our research into methods for studying how artists create and edit polygonal models and for helping manage collaborative work. We start by describing two approaches to automatically summarizing long editing workflows to provide a high-level overview as well as details on demand. The summarized results are presented in an interactive viewer with many features, including overlaying visual annotations to indicate the artist's actions, coloring regions to indicate strength of change, and filtering the workflow to specific 3D regions of interest. We evaluate the robustness of our two approaches by testing against a variety of workflows, conducting a small case study, and asking artists for feedback.

Next, we describe a way to construct a plausible and intuitive low-level workflow that turns the first of two given meshes into the second by building mesh correspondences. Analogous to text version control tools, we visualize the mesh changes in a two-way, three-way, or sequence diff, and we demonstrate how to merge independent edits of a single original mesh, handling conflicts in a way that preserves the artists' original intentions.
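a minimal sketch of a three-way merge at the level of individual elements, assuming the ancestor and both edited versions are already in correspondence (here simply by sharing vertex ids) and each version is a dict from id to position. an element changed by only one side is applied automatically; an element changed differently by both sides is reported as a conflict and left at its ancestor value for the user to resolve. the function names are hypothetical.

```python
def element_diff(ancestor, version):
    """Per-element edits from ancestor to version: id -> new value, None if deleted."""
    edits = {}
    for key in ancestor.keys() | version.keys():
        old, new = ancestor.get(key), version.get(key)
        if old != new:
            edits[key] = new
    return edits


def three_way_merge(ancestor, version_a, version_b):
    """Merge two independent edits of one ancestor; return (merged, conflicts)."""
    edits_a = element_diff(ancestor, version_a)
    edits_b = element_diff(ancestor, version_b)
    merged = dict(ancestor)
    conflicts = []
    for key in edits_a.keys() | edits_b.keys():
        in_a, in_b = key in edits_a, key in edits_b
        if in_a and in_b and edits_a[key] != edits_b[key]:
            conflicts.append(key)  # both sides changed it differently: ask the user
            continue
        new = edits_a[key] if in_a else edits_b[key]
        if new is None:
            merged.pop(key, None)  # deleted on one side (or identically on both)
        else:
            merged[key] = new      # moved or added on one side (or identically on both)
    return merged, sorted(conflicts)
```

for example, if one side moves vertex 1 and adds vertex 4 while the other moves vertex 1 elsewhere and deletes vertex 3, the merge adds 4, deletes 3, and flags only vertex 1 as a conflict.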

We then discuss methods of comparing multiple artists performing similar mesh editing tasks. We build intra- and inter-correspondences, compute pairwise edit distances, and then visualize the distances as a heat map or by embedding into 3D space. We evaluate our methods by asking a professional artist and instructor for feedback.
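as a rough stand-in for the pairwise comparison step, the sketch below treats each workflow as a plain sequence of operation names and uses the levenshtein edit distance between sequences, then prints the distance matrix as a text heat map. the thesis builds mesh correspondences before computing distances, so both this distance and the text rendering are assumptions for illustration; the function names are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences with unit costs."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,             # delete x
                curr[j - 1] + 1,         # insert y
                prev[j - 1] + (x != y),  # match (0) or substitute (1)
            ))
        prev = curr
    return prev[-1]


def distance_matrix(workflows):
    """Pairwise edit distances between all workflows."""
    return [[edit_distance(p, q) for q in workflows] for p in workflows]


def text_heat_map(matrix, shades=" .:-=+*#%@"):
    """Render an integer distance matrix as rows of characters, darker = more different."""
    top = max((d for row in matrix for d in row), default=0) or 1
    return ["".join(shades[d * (len(shades) - 1) // top] for d in row) for row in matrix]
```

for the workflows `["extrude", "extrude", "scale"]`, `["extrude", "scale"]`, and `["loopcut", "extrude", "scale", "bevel"]`, the distance matrix is `[[0, 1, 2], [1, 0, 2], [2, 2, 0]]`, and the heat map makes the third, most different workflow stand out.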

Finally, we discuss possible future directions for this research.

undergraduate research

exploring expressiveness of tiled texture mapping

spring 2017

abstract:

accelerated, efficient, and artist-friendly rendering of boolean scenes in glsl

spring 2016–fall 2016

abstract:

using neural networks to optimize filters for noisy path-traced images

fall 2016–spring 2017

abstract:

using genetic algorithms to improve robocup virtual soccer players

summer 2015

abstract:

generating, simulating, and rendering procedurally-generated planetoids

summer 2015

abstract:

exploring a novel metaprogramming language

spring 2015–fall 2015

abstract:

technical reports

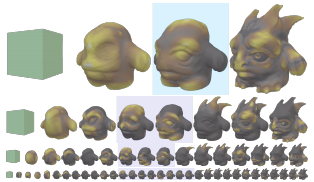

3dflow: continuous summarization of mesh editing workflows

j.d.denning, f.pellacini.

dartmouth computer science technical report tr2014-757, june 2014.

submission: paper supplemental

abstract:

Mesh editing software is continually improving, allowing skilled artists to create more detailed meshes efficiently. Many of these artists are interested in sharing not only the final mesh but also their whole workflows, both for creating tutorials and for showcasing the artist's talent, style, and expertise. Unfortunately, while mesh creation is improving quickly, sharing editing workflows remains cumbersome since time-lapsed or sped-up videos remain the most common medium. In this paper, we present 3DFlow, an algorithm that computes continuous summarizations of mesh editing workflows. 3DFlow takes as input a sequence of meshes and outputs a visualization of the workflow summarized at any level of detail. The output is enhanced by highlighting edited regions and, if provided, overlaying visual annotations to indicate the artist's work, e.g., summarizing brush strokes in sculpting. We tested 3DFlow with a large set of inputs using a variety of mesh editing techniques, from digital sculpting to low-poly modeling, and found 3DFlow performed well for all. Furthermore, 3DFlow is independent of the modeling software used since it requires only mesh snapshots, using additional information only for optional overlays. We release 3DFlow as open source for artists to showcase their work and release all our datasets so other researchers can improve upon our work.

sculptflow: visualizing sculpting sequences by continuous summarization

j.d.denning, f.pellacini, j.ou.

dartmouth computer science technical report tr2014-759, june 2014.

submission: paper

abstract:

Digital sculpting is becoming ubiquitous for modeling organic shapes like characters. Artists commonly show their sculpting sessions by producing time-lapse or sped-up videos. But the length of these sessions makes these visualizations either too long to remain interesting or too fast to be useful. In this paper, we present SculptFlow, an algorithm that summarizes sculpted mesh sequences by repeatedly merging pairs of subsequent edits, taking into account the number of summarized strokes, the magnitude of the edits, and whether they overlap. Summaries of any length are generated by stopping the merging process when the desired length is reached. We enhance the summaries by highlighting edited regions and drawing filtered strokes to indicate artists’ workflows. We tested SculptFlow by recording professional artists as they modeled a variety of meshes, from detailed heads to full bodies. When compared to sped-up videos, we believe that SculptFlow produces more succinct and informative visualizations. We release SculptFlow as open source for artists to show their work and release all our datasets so that others can improve upon our work.
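the merge loop described above can be sketched as a greedy pass over adjacent edits. the abstract names the factors (number of strokes, edit magnitude, overlap) but not how they are combined, so `merge_cost` below is a hypothetical weighting, and `Edit`, `merge`, and `summarize` are illustrative names rather than sculptflow's code.

```python
from dataclasses import dataclass


@dataclass
class Edit:
    strokes: int          # number of brush strokes summarized by this edit
    magnitude: float      # how much the edit changed the mesh (hypothetical scalar)
    region: frozenset     # ids of the vertices the edit touched


def merge_cost(a, b):
    """Hypothetical cost: prefer merging small, low-magnitude, overlapping edits."""
    overlap = len(a.region & b.region) / max(1, len(a.region | b.region))
    return (a.strokes + b.strokes) + (a.magnitude + b.magnitude) + (1.0 - overlap)


def merge(a, b):
    """Combine two consecutive edits into one (magnitudes simply added here)."""
    return Edit(a.strokes + b.strokes, a.magnitude + b.magnitude, a.region | b.region)


def summarize(edits, target_length):
    """Repeatedly merge the cheapest adjacent pair until target_length edits remain."""
    edits = list(edits)
    while len(edits) > max(1, target_length):
        i = min(range(len(edits) - 1), key=lambda k: merge_cost(edits[k], edits[k + 1]))
        edits[i:i + 2] = [merge(edits[i], edits[i + 1])]
    return edits
```

because the loop only ever merges neighbors, the summary stays in chronological order, and stopping at any `target_length` gives a summary of exactly that length (or the original sequence, if it is already shorter).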

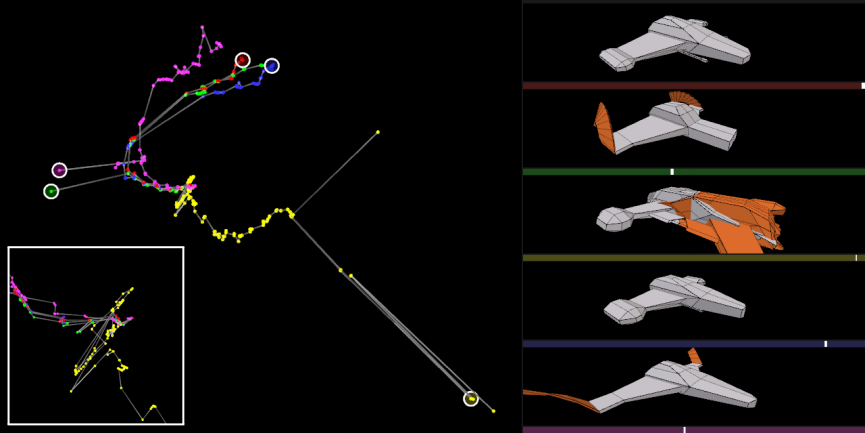

crosscomp: comparing multiple artists performing similar modeling tasks

j.d.denning, f.pellacini.

dartmouth computer science technical report tr2014-760, june 2014.

submission: paper

abstract:

In two previous papers, we focused on summarizing and visualizing the edits of a single workflow, and on visualizing and merging the edits of two independent workflows. In this paper, we focus on visualizing the similarities and dissimilarities of many workflows in which digital artists perform similar tasks. The tasks were chosen so that each artist starts from and ends at a common state. We show how to leverage our previous work to produce a visualization tool that allows for easy scanning through the workflows.

meshgit: diffing and merging polygonal meshes

j.d.denning, f.pellacini.

dartmouth computer science technical report tr2012-722, may 2012.

submission: paper

abstract:

This paper presents MeshGit, a practical algorithm for diffing and merging polygonal meshes. Inspired by version control for text editing, we introduce the mesh edit distance as a measure of the dissimilarity between meshes. This distance is defined as the minimum cost of matching the vertices and faces of one mesh to those of another. We propose an iterative greedy algorithm to approximate the mesh edit distance, which scales well with model complexity, providing a practical solution to our problem. We translate the mesh correspondence into a set of mesh editing operations that transforms the first mesh into the second. The editing operations can be displayed directly to provide a meaningful visual difference between meshes. For merging, we compute the difference between two versions and their common ancestor, as sets of editing operations. We robustly detect conflicting operations, automatically apply non-conflicting edits, and allow the user to choose how to merge the conflicting edits. We evaluate MeshGit by diffing and merging a variety of meshes and find it to work well for all.